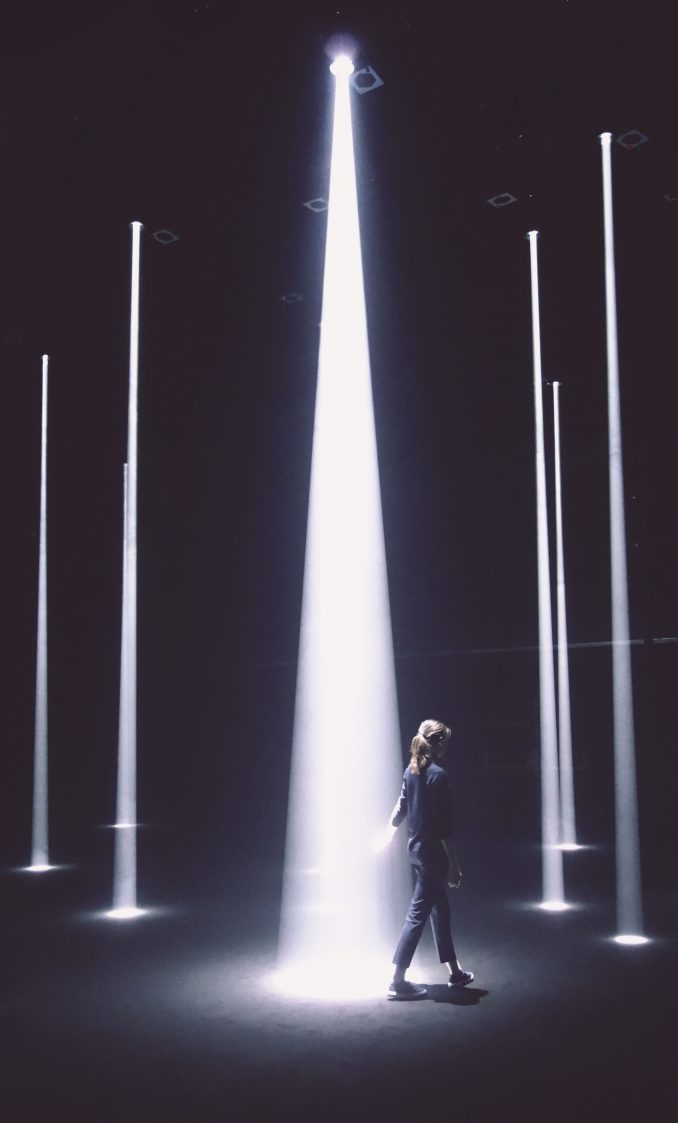

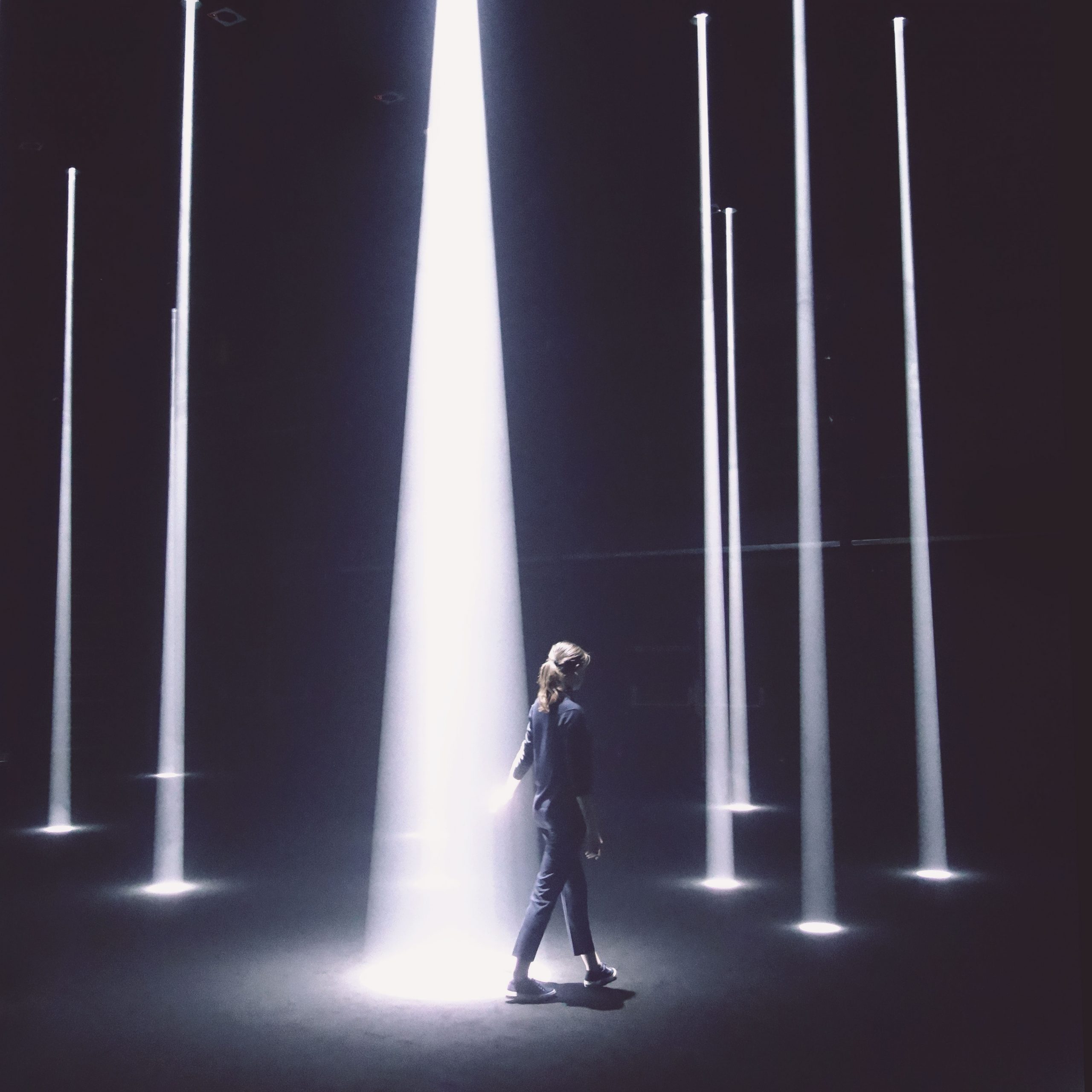

Passifolia is a monumental, interactive art installation commissioned by Hermès.

Vertical beams of light shape the space. By touching a beam of light, the visitor opens it and releases a bird song just above him. He feels the warmth of the light on his skin and is surrounded by the gentle sounds of nature. Once the bird song ends, the beam narrows, inviting the visitor to continue his exploration. A strange fauna draws a landscape that is both abstract and exuberant.

- How can lush fauna and flora be suggested through emptiness?

- How can sound and light vibrations be used to disturb our bearings and our perception of space, while giving that space a sense of materiality?

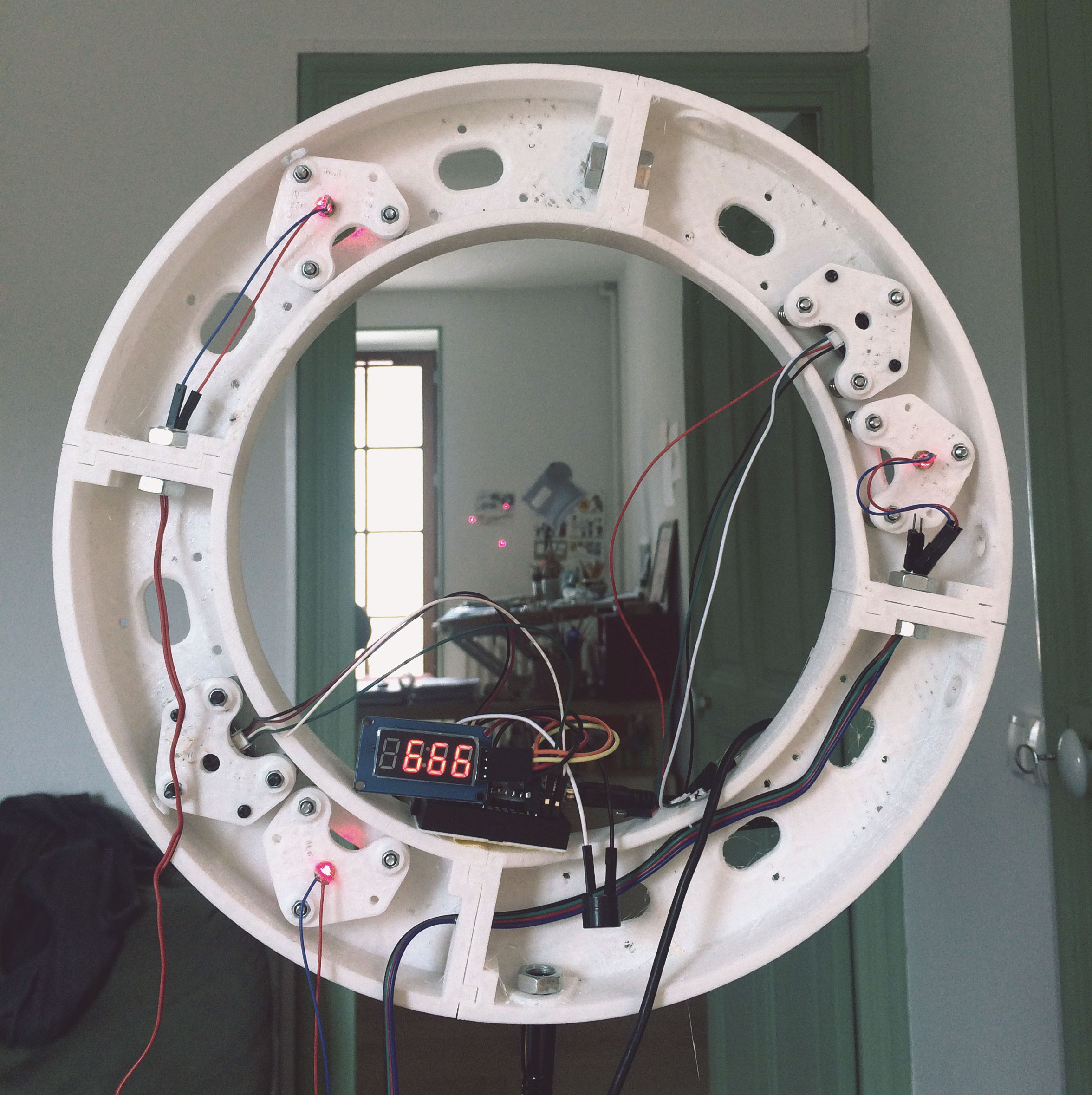

The piece consists of 16 interactive modules arranged organically throughout the space. Each module combines an automated light, an ultra-directional speaker and a sensor system.

A gentle, immersive and evolving melody is played by 12 ambient loudspeakers all around the space. The music was composed specifically for the piece by the musician Chapelier Fou, who arranged a hundred sounds of birds, squirrels, frogs, etc., recorded in the wild by naturalists, into an ever-changing, harmonious nature soundscape.

Passifolia is a collective experience, made to measure for La Gaîté Lyrique in Paris (a concert hall with a 20 × 15 m floor and a 10 m high ceiling).

Technology

Each light beam is produced by an automated projector and made visible by artificial haze. An ultra-directional loudspeaker and a sensor are aligned with the beam.

The sensor consists of a camera whose image is analysed on the fly by a dedicated program running on a Raspberry Pi. Detection takes place in a harsh environment: low light, haze, audio saturated by ultrasound, variable grip context… However, compared with other detection solutions (time-of-flight lidar, ultrasound…), video image analysis has several advantages: standard hardware; quick, easily adjustable aiming; analysis of a phenomenon visible to the naked eye, which makes it easy to understand and monitor; a wide range of data that can be analysed (RGB or HSV values); a detection area that can be adjusted as needed; and the use of the diffraction properties of haze.
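Two of the advantages listed above are that the detection area can be adjusted and that pixels can be read as RGB or HSV values. The following is a minimal sketch of both ideas using only the Python standard library, not Lab212's program. It assumes a frame is a list of rows of 8-bit (r, g, b) tuples; the region (x, y, w, h) and the helper names `crop`, `hsv_pixels` and `mean_value` are hypothetical. On the Raspberry Pi itself the frame would come from OpenCV (for example `cv2.VideoCapture`), which can also do the colour conversion with `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`.

```python
import colorsys
from typing import List, Tuple

RGB = Tuple[int, int, int]   # one 8-bit pixel (r, g, b)
Frame = List[List[RGB]]      # rows of pixels, top to bottom


def crop(frame: Frame, x: int, y: int, w: int, h: int) -> Frame:
    """Keep only the rectangular detection area starting at column x, row y."""
    return [row[x:x + w] for row in frame[y:y + h]]


def hsv_pixels(frame: Frame) -> List[Tuple[float, float, float]]:
    """Convert every pixel to (hue, saturation, value), each in [0, 1]."""
    return [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            for row in frame for (r, g, b) in row]


def mean_value(frame: Frame) -> float:
    """Average HSV 'value' (brightness) over the area; 0.0 if the area is empty."""
    pixels = hsv_pixels(frame)
    return sum(v for _, _, v in pixels) / len(pixels) if pixels else 0.0


# Hypothetical data: a 4x4 black frame with a white 2x2 patch in the middle.
frame = [[(0, 0, 0)] * 4 for _ in range(4)]
for r in (1, 2):
    for c in (1, 2):
        frame[r][c] = (255, 255, 255)

print(mean_value(frame))                    # 0.25 (whole frame)
print(mean_value(crop(frame, 1, 1, 2, 2)))  # 1.0 (detection area only)
```

Restricting the analysis to the area where the beam actually falls keeps stray light elsewhere in the camera image from influencing the measurement.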

The image analysis software uses the OpenCV library. It detects when someone enters the light beam by counting the pixels whose brightness exceeds a predefined threshold. The program must be calibrated for a specific haze density, which must then remain constant throughout the event.
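The bright-pixel test described above can be sketched as follows. This is an illustration under stated assumptions, not the installation's code: frames are grayscale nested lists (values 0 to 255), a person in the beam is assumed to raise the number of bright pixels, and the calibration rule (mean plus `k` standard deviations of the count measured on empty frames) is a hypothetical choice. With OpenCV, the counting step corresponds to `cv2.threshold(gray, threshold, 255, cv2.THRESH_BINARY)` followed by `cv2.countNonZero` on the result.

```python
from statistics import mean, pstdev
from typing import Iterable, Sequence

Gray = Sequence[Sequence[int]]  # grayscale frame, pixel values 0 to 255


def count_bright(frame: Gray, threshold: int) -> int:
    """Count pixels strictly brighter than `threshold` (like THRESH_BINARY + countNonZero)."""
    return sum(1 for row in frame for p in row if p > threshold)


def calibrate(empty_frames: Iterable[Gray], threshold: int, k: float = 3.0) -> float:
    """Hypothetical rule: trigger level = mean + k * std of counts with nobody in the beam."""
    counts = [count_bright(f, threshold) for f in empty_frames]
    return mean(counts) + k * pstdev(counts)


def is_detection(frame: Gray, threshold: int, trigger_level: float) -> bool:
    """Assumes a person in the beam raises the bright-pixel count above the baseline."""
    return count_bright(frame, threshold) > trigger_level


THRESHOLD = 200                              # hypothetical brightness threshold
empty = [[0, 210, 0], [0, 210, 0]]           # haze only: 2 bright pixels
visitor = [[210, 210, 210], [210, 210, 0]]   # hand in the beam: 5 bright pixels

level = calibrate([empty, empty, empty], THRESHOLD)
print(level)                                    # 2.0
print(is_detection(visitor, THRESHOLD, level))  # True
print(is_detection(empty, THRESHOLD, level))    # False
```

Because the trigger level is derived from the empty-beam count at one haze density, thicker or thinner haze shifts that baseline. This is why the haze has to stay constant once the system is calibrated.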

- When a detection is triggered, a signal containing the ID of the beam under which the detection occurred is sent to a server.

- The server sends a MIDI note corresponding to the beam ID to Ableton Live.

- When it receives the MIDI note, Ableton Live does two things simultaneously:

  - it plays a specific sound sequence through the ultra-directional speaker at the location of the detection;

  - it forwards the MIDI note to the light console.

- The light console then triggers the opening sequence of the motorized projector under which the detection is made. The opening of the beam and the diffusion of the directional sound thus occur simultaneously.
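The message chain above can be sketched with the Python standard library. Most of it is hypothetical: the source does not specify the transport between the Raspberry Pis and the server (a UDP datagram with a JSON payload such as `{"beam_id": 3}` is assumed here), nor the beam-to-note mapping (beam n to MIDI note 60 + n is assumed). Only the three-byte MIDI Note On layout (status `0x90` combined with the channel number, then note and velocity, each 0 to 127) is standard. Delivering those bytes to Ableton Live would additionally require a MIDI port, such as a virtual MIDI bus, which is not shown.

```python
import json
import socket

NUM_BEAMS = 16   # one beam per interactive module
BASE_NOTE = 60   # hypothetical mapping: beam n -> MIDI note 60 + n


def encode_detection(beam_id: int) -> bytes:
    """Hypothetical payload a Raspberry Pi sends when its beam is touched."""
    return json.dumps({"beam_id": beam_id}).encode("utf-8")


def decode_detection(payload: bytes) -> int:
    """Server side: recover and validate the beam ID."""
    beam_id = json.loads(payload.decode("utf-8"))["beam_id"]
    if not isinstance(beam_id, int) or not 0 <= beam_id < NUM_BEAMS:
        raise ValueError(f"unknown beam: {beam_id!r}")
    return beam_id


def note_on(beam_id: int, channel: int = 0, velocity: int = 100) -> bytes:
    """Standard 3-byte MIDI Note On: status 0x90 | channel, note, velocity."""
    note = BASE_NOTE + beam_id
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])


def send_detection(beam_id: int, host: str, port: int) -> None:
    """Fire-and-forget UDP datagram from a sensor to the server (assumed transport)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_detection(beam_id), (host, port))


# Server-side handling of one received payload:
beam = decode_detection(encode_detection(3))
print(note_on(beam).hex())  # 903f64
```

Keeping the sensor's message down to a beam ID leaves the choice of sound and light cue to Ableton Live and the light console, so the detection program does not need to know anything about the show itself.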

Medium: Automated lights, ultra-directional speakers, loudspeakers, electronics, computers.

Authors: Nicolas Guichard & Béatrice Lartigue – Lab212

Development: Jonathan Blanchet – Lab212 & Nicolas Nolibos

Music: Chapelier Fou

Light: Mathieu Cabanes

Sound: Baptiste Pohoski

Naturalist: Marc Namblard

Commissioned by Hermès

Video: ©Yannick Royo

Photos: ©Lab212